- AI usage is concentrated in software development and technical writing tasks, and roughly 36% of users see AI as applicable to at least 25% of their associated tasks.

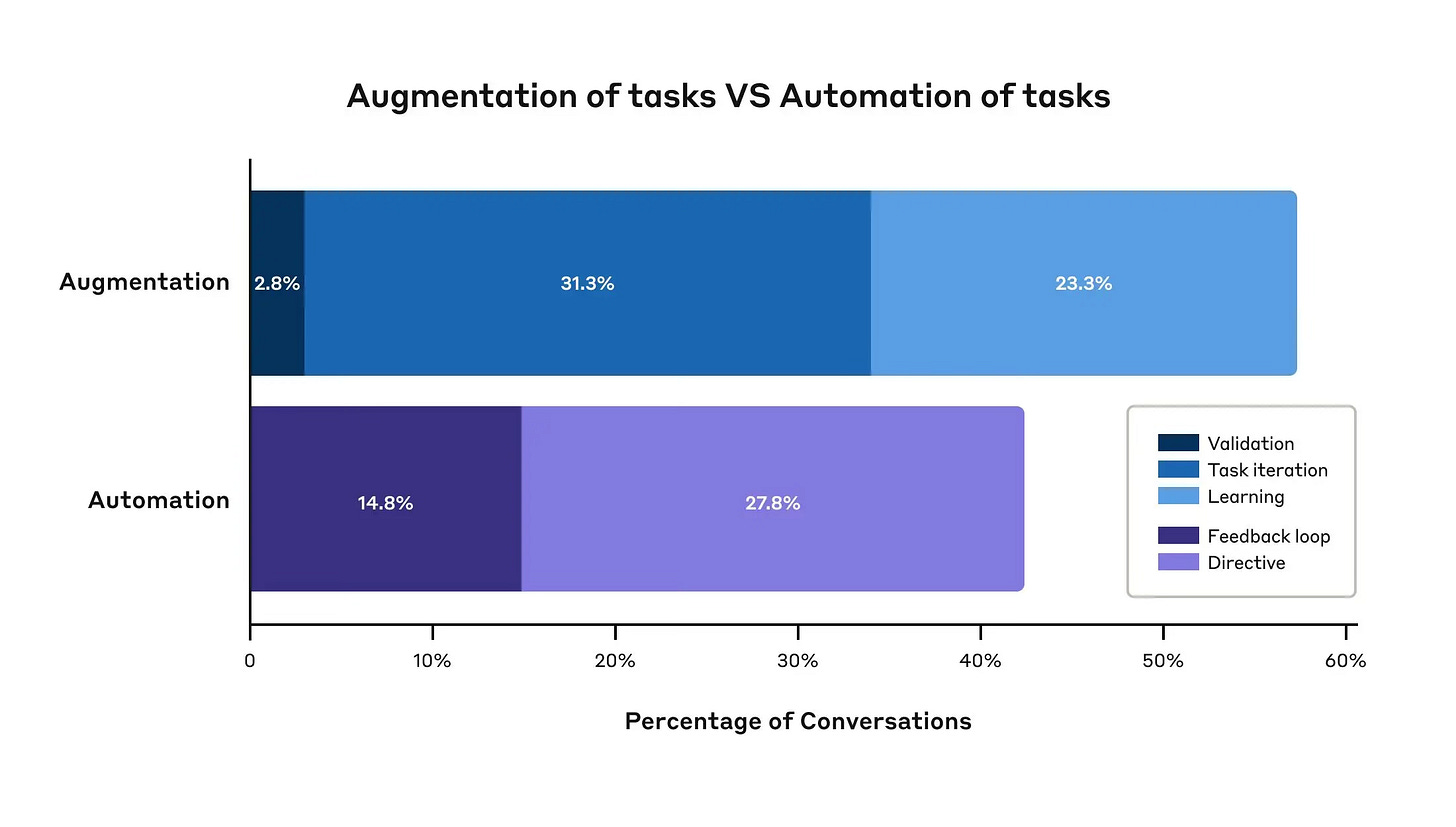

- AI use leans more towards augmentation (57%), where AI collaborates with and enhances human capabilities, compared to automation (43%), where AI directly performs tasks.

- AI use is more prevalent for tasks associated with mid-to-high wage occupations like computer programmers and data scientists, but is lower for both the lowest- and highest-paid roles.

source: Anthropic Economic Index

That last point from Anthropic’s report about software use cases for AI being mostly in the middle tier of roles is an interesting one. Tim O’Reilly recently shared a thoughtful analysis of AI’s impact on programming and – echoing the Jevons paradox – concluded that AI will end up creating more programmers, as barriers to entry are lowered.

Here are some other links and stories that caught our attention this week.

Beyond Office Automation

Whilst some of the easiest and most cost-reducing use cases for enterprise AI are to be found in office automation, centaur teams, knowledge engineering and general process work, progress in these areas is slower than hoped for in many organisations due to management inertia and the difficulty of breaking old, manual work habits.

For example, I was alarmed to read a new paper mentioned by Ethan Mollick that defines a method for sending AI delegates to meetings that use your voice and knowledge to represent you in ~~yet another pointless waste of time~~ important corporate discussions. This gives off real Severance ‘innie’ vibes, but shows that without leaders committed to re-thinking work in the age of algorithms, there is a risk companies will end up using AI to automate old ways of working instead, like a Victorian steampunk sci-fi story.

Progress in specialist knowledge work, such as financial and professional services, is happening slightly faster, although this also suffers from structural constraints (e.g. the way partnership structures operate and billing methods that can disincentivise efficient delivery). Lawyer Monthly shared a good introduction to legal use cases recently, and there is a lot of similar thinking going on across the professional services sector.

But it is interesting to see more evidence of faster progress in physical use cases such as industry, healthcare and defence:

- Biopharma: McKinsey published an insights piece about how biopharma firms can integrate their IT stack and overcome legacy challenges to create a modern platform for AI to enhance clinical development and trials.

- Industry: Capgemini Engineering’s CTO for Manufacturing and Industrial Operations shared his advice on how to enable multi-agent AI systems in the factory, and how to balance generic and specialised systems to optimise production.

- Defence: The Kyiv Independent shared an inside story of Ukraine’s first successful all-drone assault on occupying Russian positions, which marks a milestone in drone warfare and defence technology more generally.

People often share the misconception that factories and facilities don’t change their ways of working in response to new technology, but in fact they are often quicker to embrace new approaches than corporate managers in the comfort of the office. Perhaps this is because they have clearly testable objectives and fitness functions, whereas office work can be more nebulous, bureaucratic and performative due to a culture of excessive managerialism.

The Agentic OS and Moving Beyond Chatbots

In the Workforce Futurist newsletter, Matteo Cellini considers the future of agents as an operating system inside the firm and whether these will take over the organisation or perhaps lead to the rise of highly-empowered super operators:

The operating system itself could be transformed. Instead of desktops and windows, AI could generate interfaces on demand, adapting to specific needs. Content would be dynamically generated or retrieved. Interaction could also evolve, moving beyond traditional input methods to include conversational language, sign language, and even subtle body movements. Imagine simply glancing at something and gesturing vaguely, with the AI understanding your intent. This intuitive interaction promises a future where computers truly anticipate our needs.

In a similar vein, Geoffrey Huntley has written a provocative piece about the emergence of so-called high-agency people entitled The future belongs to idea guys who can just do things (I assume he means guys in the colloquial non-gendered sense). He makes the point that we are headed waaaay beyond developers having a polite AI assistant that can help with things and towards a reality where agentic AI can blast through an entire backlog at once, arguing that the most urgent task for leaders is to help people overcome their fear or future shock, and learn how to free their thinking to take advantage of this potential superpower.

“Ya know that old saying ideas are cheap and execution is everything? Well it’s being flipped on it’s head by AI. Execution is now cheap. All that matters now is brand, distribution, ideas and retaining people who get it. The entire concept of time and delivery pace is different now.”

Meanwhile, back at the ranch, the WSJ reports that agents are everywhere and nowhere, in the sense that everybody is talking about them, but few companies have really got their hands around their practical use in production.

While 61% of attendees at the summit said they’re experimenting with AI agents, 21% said they’re not using them at all. And, their most pressing concern around the technology is a lack of reliability, the poll found.

Obviously, it doesn’t yet make sense to rely on ‘one-shot’ prompting of an AI assistant or proto-agent for tasks that demand accuracy and reliability in a corporate environment; how far the output can be trusted depends on the quality of the training and reinforcement learning applied. And perhaps we should not be thinking of enterprise AI agents mainly as chatbots or assistants, as we have with GenAI to date. Most agents will probably be headless, and not very chatty, but they will be orchestrated, tested and validated by higher level agents, one of which we will talk to in order to get things done.
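To make that layered arrangement a little more concrete, here is a minimal Python sketch of a higher-level agent that routes work to headless workers and validates what comes back before accepting it. Everything in it is hypothetical and purely illustrative: the `Orchestrator` class, the `summarise` and `shout` workers and the validation rules are our own stand-ins, not any vendor's API, and a real system would call models and run far richer tests.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Result:
    task: str
    output: str
    accepted: bool
    attempts: int


# Hypothetical headless workers: plain functions with no chat interface.
def summarise(text: str) -> str:
    """Stand-in for a summarising agent: keep only the first sentence."""
    return text.split(".")[0].strip() + "."


def shout(text: str) -> str:
    """Stand-in for a worker that misbehaves against its validator."""
    return text.upper()


class Orchestrator:
    """Higher-level agent: routes tasks to workers and validates their output."""

    def __init__(self, max_attempts: int = 2) -> None:
        self.workers: Dict[str, Callable[[str], str]] = {}
        self.validators: Dict[str, Callable[[str], bool]] = {}
        self.max_attempts = max_attempts

    def register(
        self,
        name: str,
        worker: Callable[[str], str],
        validator: Callable[[str], bool],
    ) -> None:
        self.workers[name] = worker
        self.validators[name] = validator

    def run(self, name: str, payload: str) -> Result:
        worker = self.workers[name]
        validator = self.validators[name]
        output = ""
        # Retry up to max_attempts; accept only output that passes validation.
        for attempt in range(1, self.max_attempts + 1):
            output = worker(payload)
            if validator(output):
                return Result(name, output, True, attempt)
        # Nothing passed: return the last output, flagged as rejected.
        return Result(name, output, False, self.max_attempts)


orchestrator = Orchestrator()
orchestrator.register("summarise", summarise, lambda out: 0 < len(out) <= 80)
orchestrator.register("shout", shout, lambda out: out.islower())

good = orchestrator.run("summarise", "Agents are everywhere. Few are in production.")
bad = orchestrator.run("shout", "hello")
print(good)
print(bad)
```

The point of the design is that the person only ever deals with the orchestrator, which decides whether a worker's output is good enough to pass on; the workers themselves never need a conversational interface.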

Chip Huyen’s overview of agents and what they can do is a great introduction if you want to see the path beyond simple chatbot assistants.

Luke Wroblewski, who is very thoughtful on user experience and interfaces, as well as being an early adopter of GenAI for knowledge synthesis, thinks that we should also move beyond the polite, helpful assistant model of interaction and try some other modes that are also useful in helping us learn new things:

Could alternative frameworks create new possibilities for AI interaction? Education researcher Nicholas Burbules identified four main forms of dialogue back in the early nineties that could provide alternatives: inquiry, conversation, instruction, and debate.

Existing learning approaches and delivery within companies (and arguably schools and academia as well) are clearly not yet able to do the work that Huntley has in mind above, to develop AI-enhanced superpowers, curiosity and confidence, nor are they using AI in the way Luke Wroblewski hints at in terms of dialogic styles and methods. This is an important topic that we will pick up in future editions, looking at how to use AI to create abundant human intelligence, rather than just to do the thinking for us.

But perhaps that is wishful thinking that will be forgotten in the current race towards AI-enhanced idiocracy?

But Don’t Discount the Continuing Influence of Artificial General Stupidity

A novel AI risk vector is emerging, rather predictably, in the new USA that might give organisations cause for concern in the future: tweaking feedback mechanisms to politicise knowledge. Wired shared a piece last week showing how DOGE-aligned engineers were considering how to filter AI results by political affiliation, with the intention of aligning AI-produced knowledge with the biases of the new regime. Elon Musk later shared an example of how “based” his new Grok AI model will be:

screenshot from X about Grok

A salient reminder that for all our fears of the risks of AI super-intelligence, artificial human stupidity remains a far greater problem. Perhaps we need more research on this?

Satirical post on X from Tomáš Daniš