The debate about AI risks and potential harms is starting to bifurcate into the kind of toxic polarisation we are now used to seeing in politics, economics and other areas of life, amplified by online echo chambers. In addition to technical critics like Gary Marcus, there is also a quietly growing kind of ‘left-luddite resistance’ to AI, and surveys show that more people are worried about AI now than before the launch of ChatGPT.

Those of us who are excited by the empowering potential of AI, despite the obvious risks and problems, cannot just ignore or refuse to engage with these critiques if we want to deploy these technologies responsibly in the real world.

But ultimately, despite the growing fears associated with consumer AI, I think it is enterprise AI that gives us the chance to try out human-scale agentic AI and automation within safer, small-world networks, and this is where we will really learn how to thrive alongside our bot buddies.

Enterprise vs. consumer AI risk tolerance

As with previous waves of technology-led change, we are in a position today where we are perhaps too risk-averse in pursuing organisational productivity use cases for enterprise AI, and yet too casual about the far more diffuse risks of consumer AI. If the former goes wrong, it could harm a company or a department; the latter can cause widespread harm to society, especially to young people.

As an example, Wired recently published a roundup on the growing AI backlash:

This frustration over AI’s steady creep has breached the container of social media and started manifesting more in the real world. Parents I talk to are concerned about AI use impacting their child’s mental health. Couples are worried about chatbot addictions driving a wedge in their relationships. Rural communities are incensed that the newly built data centers required to power these AI tools are kept humming by generators that burn fossil fuels, polluting their air, water, and soil. As a whole, the benefits of AI seem esoteric and underwhelming while the harms feel transformative and immediate.

AI is already changing the web in major ways, as Matteo Wong wrote in The Atlantic, and this carries societal risks:

Reorienting the internet and society around imperfect and relatively untested products is not the inevitable result of scientific and technological progress—it is an active choice Silicon Valley is making, every day. That future web is one in which most people and organizations depend on AI for most tasks. This would mean an internet in which every search, set of directions, dinner recommendation, event synopsis, voicemail summary, and email is a tiny bit suspect; in which digital services that essentially worked in the 2010s are just a little bit unreliable. And while minor inconveniences for individual users may be fine, even amusing, an AI bot taking incorrect notes during a doctor visit, or generating an incorrect treatment plan, is not.

And, sadly, it is impossible to think about these issues without reflecting on the disastrous impact that the monetisation of attention has had on public discourse, society and politics, especially in the United States. It is hard enough to know who or what to trust online today, even before we see what consumer AI can do in the hands of bad actors.

In her field notes from recent Silicon Valley meetings, Zoe Scarman highlights the shift towards big consumer LLMs as superapps that mediate our relations with other firms, brands and content, as well as longer-term trends such as moving beyond phones as access devices and, eventually, physical robot tech catching up with its AI brains.

There are many good reasons to be sceptical about the rise of LLMs as superapps that seek to mediate our relationships with knowledge, the internet and each other.

For me, the main worries are really a continuation of what ruined the social web: attention farming driven by commercial needs and emotional manipulation. You can quit Twitter, ignore Instagram and leave Facebook to the angry, radicalised old people, but if AI mediates access to more important functions, then the impact could be a lot worse.

Seeing Grok tweaked to become more politically supportive of Musk’s personal beliefs is reason enough never to trust this LLM; but it also makes you wonder how much similar tweaking goes on in the reinforcement learning from human feedback (RLHF) processes used to tune other LLMs, however well-intentioned that might sometimes be.

Some worries might be overstated, or involve applying old assumptions to new ways of working. There are valid concerns about the cognitive impact of over-relying on magic machines to write and think for us. But young minds are also very adaptable, and young people today seem more sensible than my generation, so perhaps we should leave it to them to find the right balance. For example, I find it intriguing to see reports of so-called Gen Z folk using AI as a team of advisors in their lives, and it is probably too early to judge whether this is a good or a bad thing.

100k fruit flies versus a super-intelligent gorilla

The dream of a kind of ambient superintelligence is driving a lot of investment and innovation in the consumer AI field, and perhaps this is a worthy goal whose products could enhance or enrich people’s lives.

Or perhaps not.

But for now at least, that dream remains distant by any meaningful definition of intelligence.

There was an interesting debate on Reddit this week about applying Tononi’s Integrated Information Theory (IIT) of consciousness to AI, to ascertain whether AI has already reached the consciousness level of a fruit fly. My own view is that something needs to be alive, and seeking to remain so, to possess conscious intelligence. Whilst I find AI to be an impressive simulacrum of intelligence, I believe we already have more than enough biological intelligence being produced cheaply across the world that is woefully underused right now. So perhaps we should deploy our simulacra to enhance or augment this capability before we get too carried away with replacing it.

There is a lot that consumer AI can do already to augment human intelligence and learning, and to help us create value, however we define it.

But the reason we are focused on collaborative uses of AI technology within organisations is that this is where the greatest social and economic value is to be found. This value might be diffused across industries, geographies and millions of individual firms rather than captured by a handful of dominant vendors, but overall it could have a greater and more positive impact than the consumer field.

Human-run systems as software

Organisations are a form of human software that bring us together to solve problems, make things and enrich our lives, and AI has the potential to upgrade them in exciting and interesting ways that could expand the field of the possible.

Even if critics see AI today as little more than sparkling machine learning and automation, it has the potential to help us move beyond the legacy of feudal management practices and stultifying bureaucracy that hold back human creativity and innovation. And by using AI as helpers, assistants and agents, we can dramatically reduce both the time overhead and cost of coordinating work. Small teams of contributors will soon be able to create value for themselves and others without the bloated structures that surround them today.

One of the most delightful and useful ideas that emerged from the era of social computing in the enterprise was the wiki way: if you see something that is wrong, correct it. What excites me about agentic AI is partly the idea that we can build on this and say: if you see something missing, build it. As Zoe Scarman remarked about AI’s role in enabling citizen developers, in her field notes quoted above:

The real risk isn’t letting non-tech people build things. The real risk is forcing every idea to stand in line behind an IT backlog … The valley’s message was clear: Build guardrails, not gatekeepers.

None of this needs superintelligence. Small, simple agents using already existing LLM-level capabilities are more than enough to run a system of agentic automation under human oversight and control (see also Small is Beautiful: Cats vs Megamind).

We just (sic) need to codify and map our operations to create explainable rulesets that agents can follow, and then focus on the constitutional guardrails to keep them on track, as Anthropic are now exploring.
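To make the idea of an explainable ruleset with guardrails a little more concrete, here is a minimal sketch under some loudly stated assumptions. Everything in it (`Rule`, `Guardrail`, `Decision`, `decide`, the refund rules and the spend cap) is a hypothetical illustration, not Anthropic’s constitutional approach (which shapes how a model is trained) and not any real agent framework’s API. It only shows the shape of the idea: operations are written down as named rules, guardrails are checked before any action, every decision cites the rule that justified it, and anything the rules do not cover goes back to a human.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical: one codified operational rule an agent is allowed to follow.
@dataclass(frozen=True)
class Rule:
    name: str
    applies: Callable[[dict], bool]  # does this rule cover the request?
    action: str                      # what the agent does if it does

# Hypothetical: a constraint checked before any rule is applied.
@dataclass(frozen=True)
class Guardrail:
    name: str
    allows: Callable[[dict], bool]

@dataclass
class Decision:
    action: str
    reason: str  # human-readable explanation, so every step is auditable

# Illustrative ruleset only; real rules would come from mapping actual operations.
RULES = [
    Rule("refund-small",
         lambda r: r.get("type") == "refund" and r.get("amount", 0) <= 100,
         "approve_refund"),
    Rule("refund-large",
         lambda r: r.get("type") == "refund" and r.get("amount", 0) > 100,
         "escalate_to_human"),
]

GUARDRAILS = [
    Guardrail("spend-cap", lambda r: r.get("amount", 0) <= 1000),
]

def decide(request: dict) -> Decision:
    # Guardrails first: a violation always hands control back to a person.
    for g in GUARDRAILS:
        if not g.allows(request):
            return Decision("escalate_to_human", f"blocked by guardrail '{g.name}'")
    # Follow the first matching rule and cite it as the explanation.
    for rule in RULES:
        if rule.applies(request):
            return Decision(rule.action, f"matched rule '{rule.name}'")
    # Nothing codified covers this case: escalate rather than improvise.
    return Decision("escalate_to_human", "no matching rule")

if __name__ == "__main__":
    for req in [{"type": "refund", "amount": 40},
                {"type": "refund", "amount": 5000},
                {"type": "complaint"}]:
        print(req, "->", decide(req))
```

The design choice here is that the agent never improvises: a small refund is approved with a cited rule, an over-cap request is stopped by the guardrail, and an unfamiliar request is escalated, keeping a human in the loop wherever the written rules run out.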

Keeping things small-scale means keeping things at a human scale, and every single lesson we have learned about the dangers of social media or consumer AI teaches us that small-world networks where people share a common purpose are orders of magnitude safer and more constructive than, say, Elon-era Twitter.

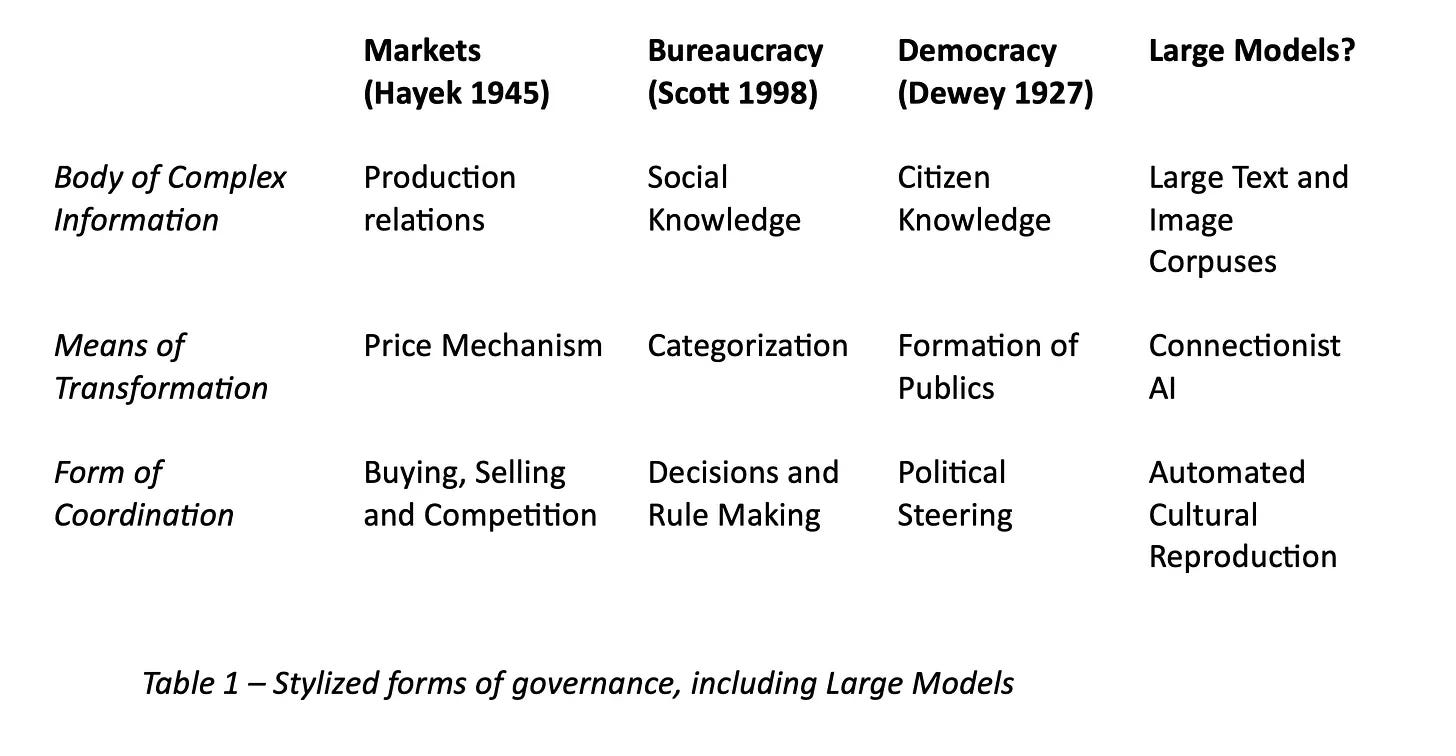

There is so much potential in the pursuit of systems as software, populated by a hybrid structure of people, software and AI agents, but very much guided by human programming and oversight, and governed according to transparent and explainable rulesets. I am still grappling with the many references in Henry Farrell’s fascinating and extensive synthesis of ‘AI as Governance’, which starts from the field of political science and expands outwards into adjacent areas of enquiry. Much of it is highly relevant to enterprise AI, particularly the question of how we might govern, program and guide human systems with the assistance of AI capabilities.

Would love to hear what you think about the hype, the fears, the possible divergence of enterprise and consumer AI tech, and the potential for AI governance of systems as software…